AOT in serverless .NET

This is the final entry in this blog series about .NET Core 3.1 projects as functions or serverless applications in AWS Lambda. Probably.

What?

I'll go over an easy, low-hanging-fruit way to improve cold start performance in .NET Lambda functions by using a new dotnet publish property called PublishReadyToRun.

Taken verbatim from the relevant .NET documentation article:

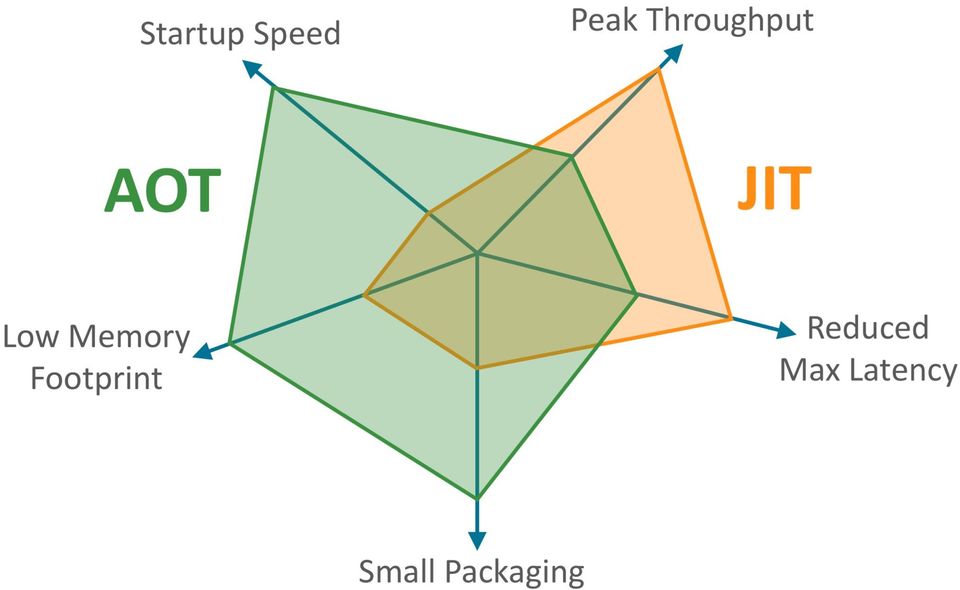

.NET application startup time and latency can be improved by compiling our application assemblies as ReadyToRun (R2R). R2R is a form of ahead-of-time (AOT) compilation.

R2R binaries improve startup performance by reducing the amount of work the just-in-time (JIT) compiler needs to do as your application loads. The binaries contain similar native code compared to what the JIT would produce. However, R2R binaries are larger because they contain both intermediate language (IL) code, which is still needed for some scenarios, and the native version of the same code. R2R is only available when you publish an app that targets specific runtime environments (RID) such as Linux x64 or Windows x64.

To compile your project as ReadyToRun, the application must be published with the PublishReadyToRun property set to true.
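As a rough sketch of what that looks like in practice, the property can live in the project file instead of being passed on the command line. The fragment below assumes a .NET Core 3.1 project and the linux-x64 runtime identifier (the one AWS Lambda needs, since it runs on Amazon Linux 2); the rest of your .csproj stays as it is.

```xml
<!-- Sketch only: add these properties to the existing PropertyGroup in your .csproj -->
<PropertyGroup>
  <TargetFramework>netcoreapp3.1</TargetFramework>
  <!-- R2R needs a specific runtime identifier; linux-x64 is assumed here for Lambda -->
  <RuntimeIdentifier>linux-x64</RuntimeIdentifier>
  <!-- Compile assemblies as ReadyToRun at publish time -->
  <PublishReadyToRun>true</PublishReadyToRun>
</PropertyGroup>
```

With this in place, a plain dotnet publish -c Release should produce R2R binaries without extra flags.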

Why?

Significantly improved cold start latency, reduced execution duration and (even though a negligible metric by all accounts) a smaller disk size footprint for your .NET functions and serverless applications in AWS Lambda.

These gains depend on whether your project contains large amounts of code or large dependencies like the AWS SDK for .NET. R2R is less effective on small functions using only the .NET Core base library or similar.

As a case study, I picked a serverless application with a lot of third-party dependencies: both NuGet packages and internal .NET Standard libraries.

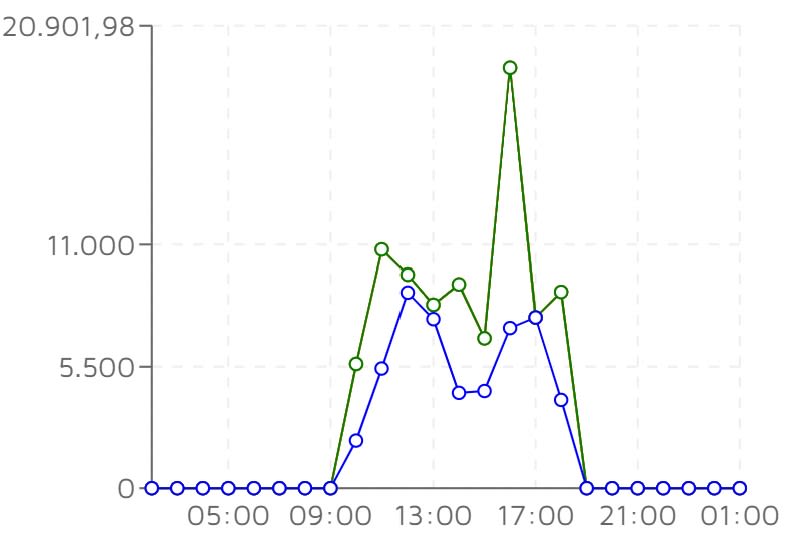

I don't have hard data on cold starts yet, because our monitoring tool gives me no great way to measure cold starts in isolation. What I do have is a graph that shows the consistently lower overall execution duration of the same .NET Core 3.1 AWS Lambda function deployed with (blue) compared to without (green) R2R enabled:

Σt = potential cold start time + execution duration

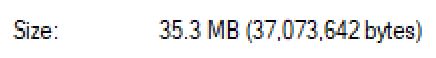

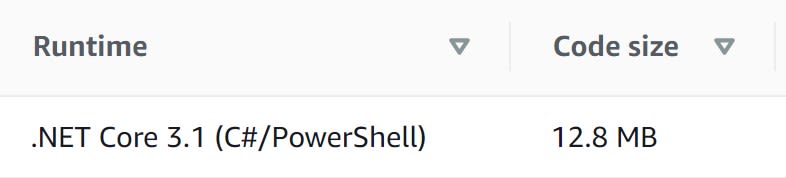
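If you can get at the raw REPORT lines that Lambda writes to CloudWatch Logs, the Σt above can be approximated per invocation. This is a minimal sketch, not what produced the graphs: total_ms is a hypothetical helper name, the sample values are made up, and it assumes the usual REPORT layout where "Init Duration" only appears on cold starts.

```shell
#!/usr/bin/env bash
# total_ms (hypothetical helper): read Lambda REPORT log lines on stdin and
# print, per line, Init Duration (0 on a warm start) + Duration, in ms.
total_ms() {
  awk '
    /^REPORT/ {
      line = $0
      init = 0
      # "Init Duration: N ms" is only logged for cold starts.
      if (match(line, /Init Duration: [0-9.]+/)) {
        init = substr(line, RSTART + 15, RLENGTH - 15)
      }
      # Drop the other "... Duration:" fields so only the plain one remains.
      gsub(/(Init|Billed) Duration: [0-9.]+/, "", line)
      dur = 0
      if (match(line, /Duration: [0-9.]+/)) {
        dur = substr(line, RSTART + 10, RLENGTH - 10)
      }
      printf "%.2f\n", init + dur
    }'
}

# Made-up cold start sample: 400.25 ms init + 100.50 ms execution.
printf 'REPORT RequestId: example\tDuration: 100.50 ms\tBilled Duration: 101 ms\tInit Duration: 400.25 ms\n' | total_ms
```

Piping an exported log file through total_ms gives one Σt value per invocation, which you can then compare between the R2R and non-R2R deployments.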

Finally, the size of the release build artifacts on Windows:

versus on Linux with R2R enabled:

How?

All one needs to do to take advantage of the R2R publish feature for packaging .NET projects to AWS Lambda is (plot twist: yes, I am developing on Windows): dotnet publish -c Release -r win-x64 -p:PublishReadyToRun=true, right?

AWS Lambda containers run on Amazon Linux 2, so if we want a subset of our .NET project's code to run JIT-less, then our code had better be compiled for the same runtime identifier: linux-x64 in this case. In my case, that means opening up a Windows Terminal tab on WSL2:

dotnet lambda deploy-function YourProjectName --msbuild-parameters "/p:PublishReadyToRun=true --self-contained false"

Finale

.NET 5 is out by now but, as usual, it's not officially supported as an AWS Lambda runtime because it's not an LTS version. We'll have to wait for .NET 6 for that, but we can always use a custom runtime to get the latest goodies even now.

One of my writing resolutions for 2021 is to start a series about common serverless use cases and application design patterns.

What are some serverless architecture issues you'd like to discuss and explore in-depth? Let me know in the comments!